I am tutoring some people who are getting started with Kubernetes. On their local machines they use minikube, which lets them explore a lot of k8s features without immediately needing a full-fledged cluster. My students do a lot of research themselves, but occasionally they come back to me with questions they can't find an answer to. So as not to lose those questions, I am writing them down here, and I will keep updating this post as new ones come up.

LoadBalancer Services

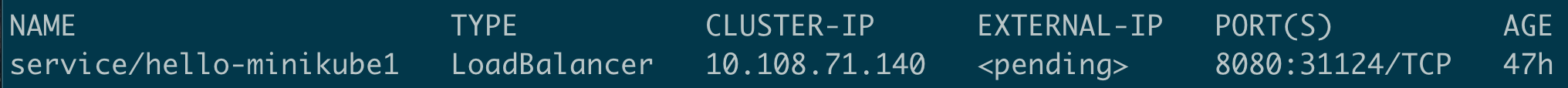

One team member started experimenting with exposing services running inside her minikube to the outside world. She defined an object of kind Service and, in its spec, set the type to LoadBalancer, expecting it to be assigned an external IP eventually. However, the EXTERNAL-IP column remained in state <pending>:

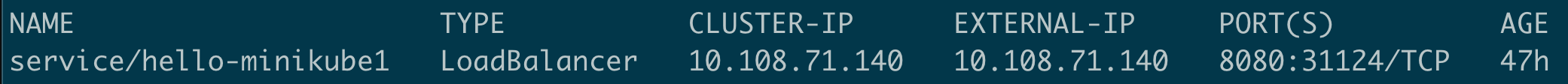
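For reference, a Service like the one described above might look like the manifest printed below. This is a minimal sketch, not her actual definition: the Service name "hello-lb", the Pod label "app: hello" and the ports are hypothetical. The small shell wrapper only prints the manifest, so it can be piped into "kubectl apply -f -".

```shell
#!/usr/bin/env bash
# Minimal sketch: print a Service manifest of type LoadBalancer.
# Hypothetical defaults: Service "hello-lb", Pods labelled app=hello,
# container listening on port 8080. Adjust these to your own deployment.
set -euo pipefail

print_lb_service() {
  local name="${1:-hello-lb}"   # metadata.name of the Service
  local app="${2:-hello}"       # must match the "app" label on your Pods
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: LoadBalancer   # asks the platform for an external IP
  selector:
    app: ${app}
  ports:
    - port: 80          # port exposed on the external IP
      targetPort: 8080  # port the container listens on (assumption)
EOF
}

print_lb_service "$@"
```

Applied with "bash lb-service.sh | kubectl apply -f -" (the file name is hypothetical), "kubectl get service hello-lb" on minikube keeps showing <pending> in the EXTERNAL-IP column, just as described above.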

Well, in this case "minikube tunnel" is your friend. Open another terminal window, run the "minikube tunnel" command, and voilà: you now have an external IP (it goes away as soon as you close the tunnel window).

Please note that this is specific to minikube. When you use the managed k8s environment of a public cloud provider such as AWS, Google, Microsoft or DigitalOcean, the provider automatically handles everything required for a "real" LoadBalancer Service.
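While "minikube tunnel" is running in the other window, you can also wait for the address from a script instead of re-running "kubectl get service" by hand. A minimal sketch, assuming a Service such as the hypothetical "hello-lb": it reads the IP through kubectl's jsonpath output, which stays empty as long as the EXTERNAL-IP column shows <pending>.

```shell
#!/usr/bin/env bash
# Minimal sketch: poll a LoadBalancer Service until it has an external IP.
# Assumes "minikube tunnel" is already running in another terminal.
set -euo pipefail

wait_for_external_ip() {
  local svc="$1"            # Service name, e.g. the hypothetical "hello-lb"
  local timeout="${2:-60}"  # seconds to wait before giving up
  local ip="" i
  for ((i = 0; i < timeout; i++)); do
    # Empty while EXTERNAL-IP is <pending>. Some cloud load balancers
    # report a hostname instead of an IP; this sketch only checks ".ip".
    ip="$(kubectl get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)"
    if [[ -n "$ip" ]]; then
      echo "$ip"
      return 0
    fi
    sleep 1
  done
  echo "no external IP for service '$svc' after ${timeout}s" >&2
  return 1
}

# Usage: wait_for_external_ip hello-lb 120
```

Polling once per second with a timeout keeps the script from hanging forever if the tunnel was never started.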

Minikube DNS

Minikube comes with DNS activated by default, so most people won't have to do anything: it works out of the box. One team member approached me because he wasn't that lucky. He is using a machine running Mac OS with VirtualBox for virtualisation, and with this combination you need to change a setting of your virtual machine.

Let's assume your VM is named "minikube" (this is the default; you can check it via "minikube status"). Open a terminal window and run the following commands:

minikube stop

VBoxManage modifyvm minikube --natdnshostresolver1 on

minikube start

This does the trick and makes DNS available to all your objects. For those who are curious about the details, you can find them here and here.
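To check that name resolution actually works after the restart, you can resolve a name from inside a short-lived Pod. A minimal sketch: the Pod name "dns-test" is hypothetical, and the busybox:1.28 image is a common choice for this because nslookup in some newer busybox images is known to misbehave inside Kubernetes. Resolving "kubernetes.default" exercises cluster-internal DNS, while an external name also exercises the VM's upstream resolver, which is what the VirtualBox setting affects.

```shell
#!/usr/bin/env bash
# Minimal sketch: resolve a DNS name from inside a throwaway Pod.
# "dns-test" is a hypothetical Pod name; --rm deletes the Pod afterwards.
set -euo pipefail

check_cluster_dns() {
  local name="${1:-kubernetes.default}"  # name to resolve inside the cluster
  kubectl run dns-test --image=busybox:1.28 --restart=Never --rm -i \
    -- nslookup "$name"
}

# Usage:
#   check_cluster_dns                # cluster-internal name
#   check_cluster_dns example.com    # external name, forwarded to the VM's resolver
```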

Exposing a Minikube Ingress

Next up was an attempt to expose the (training) Minikube so that it was temporarily accessible from other machines on the local network. As this was outside the scope of the training and only meant to be temporary, we used the kubectl port-forward command to accomplish this:

kubectl port-forward \
  --address=0.0.0.0 \
  --namespace=kube-system \
  deployment/ingress-nginx-controller 80:80

You should see a response similar to this one here:

Forwarding from 0.0.0.0:80 -> 80

As the response indicates, this will forward all traffic coming in via the Minikube host's network adapter on port 80 to the ingress controller listening on port 80 of our Minikube. Whenever an incoming request is handled, you'll see a corresponding log line:

Handling connection for 80

Logging

In the pre-k8s era, the team had already been using Elasticsearch and Kibana for centralized logging. At the time, their Docker containers used the fluentd log driver to send their logs to a locally installed log shipping agent (td-agent), which in turn forwarded all received messages to the Elasticsearch instance. The team wanted to keep using Elasticsearch/Kibana, so the question was simply how to accomplish this. One of the team members did some digging and came across this article: https://mherman.org/blog/logging-in-kubernetes-with-elasticsearch-Kibana-fluentd/. Another (similar) post can be found here: https://medium.com/trendyol-tech/forwarding-kubernetes-containers-logs-to-elasticsearch-with-fluent-bit-and-showing-logs-with-411587e54e22
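One possible way to ship container logs to an existing Elasticsearch is to run Fluent Bit from its official Helm chart, which by default deploys it as a DaemonSet. This is a hedged sketch, not necessarily the approach taken in the linked articles: it assumes Helm 3 is installed, and the release name "fluent-bit", the namespace "logging" and the values file name are hypothetical. On the first run it only saves the chart's default values, so you can point the Elasticsearch output at your own host before anything gets installed (in current chart versions this is the [OUTPUT] block under config.outputs; check the dumped file).

```shell
#!/usr/bin/env bash
# Minimal sketch: run Fluent Bit via its Helm chart and ship logs to Elasticsearch.
# Release "fluent-bit", namespace "logging" and the values file name are
# hypothetical. Requires Helm 3.
set -euo pipefail

install_fluent_bit() {
  local values_file="${1:-fluent-bit-values.yaml}"
  helm repo add --force-update fluent https://fluent.github.io/helm-charts
  helm repo update
  if [[ ! -f "$values_file" ]]; then
    # First run: save the chart defaults so the Elasticsearch output
    # (Host/Port) can be edited before anything is installed.
    helm show values fluent/fluent-bit > "$values_file"
    echo "edit $values_file, then run this again" >&2
    return 1
  fi
  # "upgrade --install" installs the release the first time, upgrades it later.
  helm upgrade --install fluent-bit fluent/fluent-bit \
    --namespace logging --create-namespace \
    --values "$values_file"
}

# Usage: install_fluent_bit my-values.yaml
```

Afterwards, "kubectl get pods --namespace logging" should list one Fluent Bit Pod per node, which on a single-node minikube means exactly one.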